Are CTE the same as GGP?

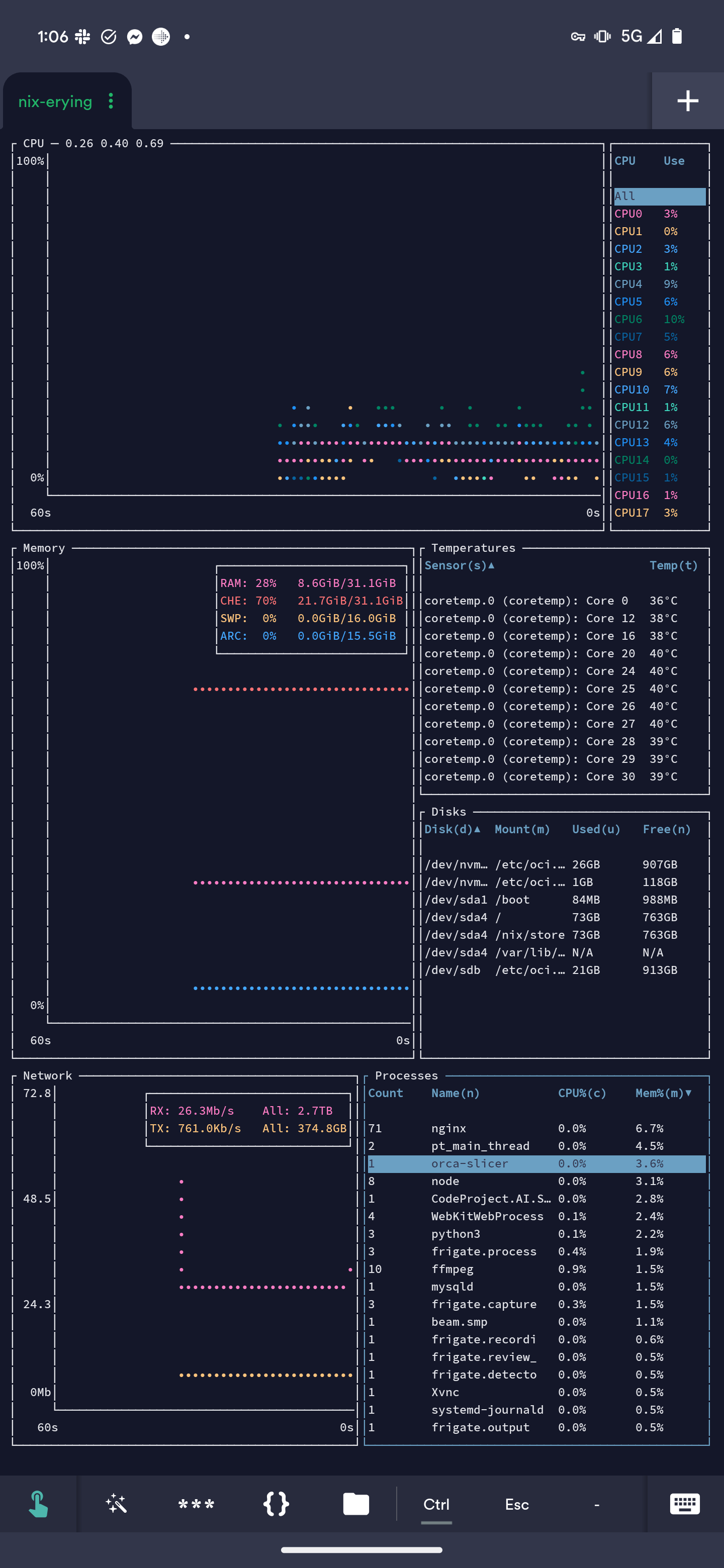

Is Orca that resource intensive? I’m running it in a container with KasmVNC and have never really checked out the resource usage. Admittedly it’s on one of my local servers in another room. I guess it’s how large your projects are too.

Edit: maybe it’s just my small projects

They better stay away from .mom domains

It was even ported to the original Xbox. I remember the game’s total file size being incredibly small compared to most other titles on that system.

Ehhhh, mental outlaw made a video on just that topic a few hours ago.

TLDW: people didn’t update packages for illegal tings, glowys rolled up.

Lets me repurpose an old phone for some headless container funtimes. If I can infect it with NixOS I’ll be golden.

Mikrotik are really aimed at advanced users, while Ubiquiti brand themselves as prosumer products. I found the Ubiquiti interface a complete mess - but it could just be me.

If it can run OpenWRT I’d suggest taking that path (if you like to tinker / the device supports it). My Google WiFi hubs are still humming away after all these years - now with way more features and a usable interface!

“You see, killbots have a preset kill limit. Knowing their weakness, I sent wave after wave of my own men at them until they reached their limit and shut down.”

Dear god, I do vaguely remember their launch (not my portfolio while working in PC component procurement) but had completely pushed that from my head.

Looks like LG have the same thing going on too, what a waste of silicon.

You have wifi / ethernet in your PC monitor?

I run my 4800hs / 1650 laptop in hybrid mode, it’s ‘OK’ on battery but I bought it used and it’s seen some usage (70% health) in its past life. I’ve been using hyprland for maybe 6 months now and support has only improved with time.

You will run into issues with specific multiplayer titles in a VM. Depending on what you plan on playing, having a 2nd partition might be the way to go. Some titles won’t care about being in a VM, some will flat-out refuse to work or kick you after some time, and many are compatible out of the box under Linux / Proton.

Supergfxctl is a handy tool for toggling your dedicated card. My laptop is ASUS, so I can’t be certain what level of compatibility you’ll see with it on other hardware.

Currently all iOS browsers are Safari with custom interfaces layered on top.

The volume of cash that Microsoft throw at retailers (custom builders / big box) is astronomical. I worked for a relatively small retailer with some international buying power. EOFY “MDF” from Microsoft was an absurd figure.

Our builders would belt out 3 - 6 machines per day, depending on complexity of the custom build, the pre-built machines were in the 6+ per day range.

Considering the vast majority of those machines were running Windows (some sold without an OS), from a quick estimate after too many beers we were out of pocket 10% at most of the bulk buy price for licence keys after our “market development funds” came through.

It’s fucking crook.

I’ve not tested the linked method, but yeah, it looks like it’s possible that way.

My lone VM doesn’t need a connection to those drives so I’ve not had a reason to.

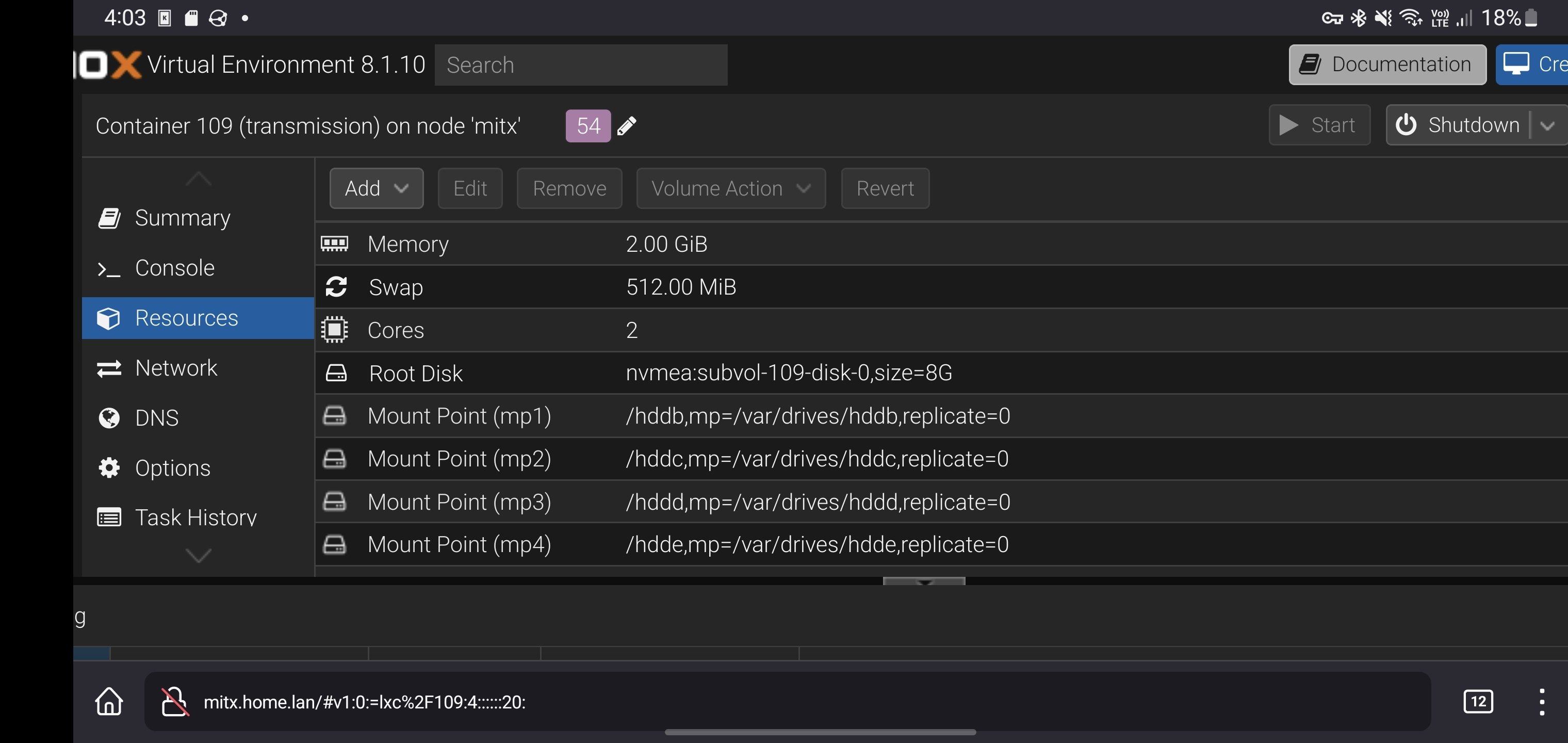

You could probably run OMV in an LXC and skip the overheads of a VM entirely. LXC are containers, so you can just edit the config files for the containers on the Proxmox host and pass drives right through.

Your containers will need to be privileged. If you already have something set up as unprivileged, you can also clone the container and make the clone privileged!
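For anyone wondering what the config edit looks like: a rough sketch, assuming the usual Proxmox mount point syntax (`mpN: <host path>,mp=<container path>`) in the container's config under `/etc/pve/lxc/`. The helper name `bind_mount_line` and the `/mnt/storage` paths are made up for illustration, swap in your own.

```python
def bind_mount_line(index: int, host_path: str, container_path: str) -> str:
    """Build one bind-mount entry for a Proxmox LXC config file.

    Hypothetical helper: it only formats the line, it does not touch
    any files or call any Proxmox tooling.
    """
    if index < 0:
        raise ValueError("mount point index must be non-negative")
    if not host_path.startswith("/") or not container_path.startswith("/"):
        raise ValueError("both paths must be absolute")
    # Left side is the directory on the Proxmox host,
    # mp= is where it appears inside the container.
    return f"mp{index}: {host_path},mp={container_path}"


if __name__ == "__main__":
    # Example paths are assumptions, not taken from a real setup.
    print(bind_mount_line(0, "/mnt/storage", "/mnt/storage"))
```

Paste the printed line into the container's config on the host and restart the container. Back up the config first, same as you would before any other manual edit.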

Yeah there is a workaround for using bind-mounts in Proxmox VMs: https://gist.github.com/Drallas/7e4a6f6f36610eeb0bbb5d011c8ca0be

If your drives are mounted to the Proxmox host (and not to a VM), you could try an LXC for the services you are running. If you require a VM, then the above workaround would be the way to go, after backing up your data.

I’ve got my drives mounted in a container as shown here:

Basicboi config, but it’s quick and gets the job done.

I’d originally gone down the same route as you had with VMs and shares, but it was all too much after a while.

I’m almost rid of all my VMs; Home Assistant is the last package I’ve yet to migrate. I’ve migrated Frigate to a Docker container under NixOS, my Tailscale exit node runs under NixOS too, and the vast majority of other packages are already in LXC.

Ahh the shouting from the rooftops wasn’t aimed at you, but the general group of people in similar threads. Lots of people shill tailscale as it’s a great service for nothing but there needs to be a level of caution with it too.

I’m quite new to the self hosting game myself, but services like tailscale which have so much insight / reach into our networks are something that in the end, should be self hosted.

If you’re using SMB locally between VMs, maybe try Proxmox. https://clan.lol/ is something I’m looking into to replace Proxmox down the line. I currently share bind-mounts between multiple LXC from the Proxmox host OS; configuration is pretty easy, and there are lots of tutorials online for getting started.

I still use it, the service is very handy (and passes the wife test for ease of use)

Probably some tinfoil hat level of paranoia, but it’s one of those situations where you aren’t in control of a major component of your network.

Tailscale is great, but it’s not something that should be shouted from the rooftops.

I use tailscale with nginx / pihole for my home services BUT there will be a point where the “free” tier of their service will be gutted / monetized and your once so free, private service won’t be so free.

Tailscale are SaaS (software as a service). Once their venture capital funds look like they’re running dry, the funds will be coming from your data, from limiting the service with a push to subscription models, or a combination.

Nebula is one such alternative, Headscale is another. WireGuard (which Tailscale is based on) is another again.

I tried and bricked my S10+

Soft bricked, can probably recover but have not got the time to bring it back.